WhatsApp CRM

This is not a C# or Blazor guide by any means; it’s just an overview of a failed project.

This was supposed to be a personal CRM: a SaaS for my own use that I would also sell. In Brazil, at least, WhatsApp is the default communication tool, so it made sense to build a project integrated with it. After some brainstorming about how to display the data and what the main features should be, I was disappointed to find, while building the MVP, that WhatsApp has no easy way to generate a readable backup of all your contacts at once. You can only export one user or one group at a time, with a limit of around 40 thousand messages, so there is a good chance that big, old groups would lose messages in the manual backup. It also doesn’t link media to their place in the chat: media files are exported as «media-type»-date-WAxxxx, so I could only tell which day a file was sent, not where it belongs in the conversation.

The WhatsApp backup, which is usually created daily in the early hours of the morning, can only be used by the WhatsApp app itself. Even Instagram’s backup lets you download every single message as JSON. Well, WhatsApp does not have persistent online storage, so yeah, I can understand the lack of a full backup feature, but I can’t understand why we use WhatsApp so much… well, I guess this is a rant for another day.

There is also a hacky way to access every chat: using an old Android version in an emulator to get the WhatsApp encryption key and decrypt the whole database. A friend of mine even managed to do it and sent me an .html file with some hundreds of thousands of messages from a group, for which I wrote a parser that converts it to .json. This is the reason you will find a function that deals with .json files in the services code, besides the one for .txt. But there is no way to build a reliable service on top of a hack, without an official tool for it.

So I would say that no one would want to generate, every week or so, a backup of each contact they exchanged messages with, even less so when my target audience would be power users who use WhatsApp a lot. So yeah, the project was dead. RIP my SaaS.

First, this shows how important an MVP is: just try to build the basic functionality of any project you want to make before dealing with the advanced stuff. I could have lost a lot of hours if I had started with some of the charts that were supposed to be in the final product.

Well, since I already had some of the back-end ready, I decided to build it out a little more so I could also take a look at Blazor Server. I had already been looking to use Blazor Server in some test project; I just had no idea what to build.

Blazor

Blazor is Microsoft’s new front-end framework. It comes in two flavors: Blazor Server, which keeps all the code on a remote server and talks to the user’s device over SignalR, and Blazor WebAssembly, which runs the app in the browser. Here I used Blazor Server.

My biggest complaint about it is IntelliSense, at least in VS Code: it stops and crashes all the time. It works so well with every other Microsoft language, but not here, not yet. For a small project like this one I can accept it, it’s not a huge deal, but it’s still annoying. For a huge project with a lot of people working on it… well, better to keep yourself informed on GitHub, like in here. And also, no formatter yet.

And yeah, speaking of Microsoft languages, there is TypeScript, with amazing IntelliSense. One of the selling points of Blazor is that it uses C# instead of JavaScript, but since every major front-end framework today (Angular, React, Vue) has TypeScript support, I can’t really see it as a selling point. I worked with C# and VB.NET before I tried TypeScript, and once I tried it, it felt like an old friend. I think it’s really easy to understand if you have some C# background; you will likely spend more time learning the framework itself.

What I really liked about Blazor was how easy it is to share classes between the front-end and the back-end: having a single “Folder” model class and being able to just do something like below to parse the data is really simple.
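A minimal sketch of that idea, not the project's actual code: the properties of `Folder`, the `FolderParser` helper, and the JSON shape are all assumptions made for illustration. The point is only that the same model class can be used on both sides, so the JSON coming from the API deserializes straight into it.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical shared model: the same class would be referenced by the
// API project and by the Blazor project, so no separate front-end type exists.
public class Folder
{
    public int Id { get; set; }
    public string Name { get; set; } = "";
}

// Hypothetical helper, only here to keep the sketch self-contained.
public static class FolderParser
{
    // Web defaults match JSON property names case-insensitively ("id" -> Id).
    private static readonly JsonSerializerOptions Options =
        new JsonSerializerOptions(JsonSerializerDefaults.Web);

    // Turns the JSON body returned by the API into the shared model type.
    public static List<Folder> Parse(string json) =>
        JsonSerializer.Deserialize<List<Folder>>(json, Options) ?? new List<Folder>();
}
```

Inside a Blazor component with an injected `HttpClient`, the same thing is usually a single call such as `await Http.GetFromJsonAsync<List<Folder>>("api/folders")`, where the route is again just an assumption.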

The bi-directional state was also simple to deal with. I had a component_X that uploaded the .txt file, and when the upload finished I needed to notify the component that owned component_X, so it could update its state.

So I just injected the NewUploadService into component_X and called it once I had an answer from the API.

So the parent component would update. Still, it’s not as simple as having a single store, like re-frame.
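The notification pattern described above can be sketched in plain C#. Only the name NewUploadService comes from the text; its members and the two stand-in component classes are assumptions, and real Blazor components would be .razor files with the service injected rather than passed through a constructor.

```csharp
using System;

// Hypothetical shape of NewUploadService; the member names are assumptions.
public class NewUploadService
{
    // Raised when an upload has finished. Starts with a no-op handler
    // so it can be invoked safely even with no subscribers.
    public event Action OnUploadFinished = delegate { };

    public void NotifyUploadFinished() => OnUploadFinished();
}

// Stand-in for the parent component that owns component_X.
public class ParentComponent : IDisposable
{
    private readonly NewUploadService _uploads;
    public int RefreshCount { get; private set; }

    public ParentComponent(NewUploadService uploads)
    {
        _uploads = uploads;
        _uploads.OnUploadFinished += Refresh;
    }

    // A real Blazor component would reload its data here and then
    // typically call InvokeAsync(StateHasChanged) to re-render.
    private void Refresh() => RefreshCount++;

    // Unsubscribe so the service does not keep the component alive.
    public void Dispose() => _uploads.OnUploadFinished -= Refresh;
}

// Stand-in for component_X, the one that uploads the file.
public class UploadComponent
{
    private readonly NewUploadService _uploads;

    public UploadComponent(NewUploadService uploads) => _uploads = uploads;

    // Called once the API has answered the upload request.
    public void OnApiAnswered() => _uploads.NotifyUploadFinished();
}
```

For both components to see the same instance, the service would have to be registered with a shared lifetime (scoped, for example, in Blazor Server), which is also an assumption about the setup.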

If you are still interested in Blazor, take a look at this Microsoft guide, so you can compare Blazor Server with WASM.

If you try to run the app, keep in mind that I didn’t try to make it responsive at all: if you resize it, things will get ugly. It’s also still using the almost-default Bootstrap theme.

ASP.NET Core

Nothing really new here, as long as you aren’t one of those who still say “C# is Windows only”. I just declared all the controller endpoints needed and passed the requests to the services (or repository?) layer, which deals with the database through EF Core.

I would argue that there is almost no business logic in this app, at least in its current state. The only rule is that a folder should hold only one copy of a message, where a message is identified by its user, its time, and its content. Now there is an issue: a file could have around 40 thousand messages, so to add a single file I would need up to 40 thousand trips to the database. Performance was horrible when I tried to add the .json file with hundreds of thousands of messages, even with a local database.

So instead of doing all those trips, I decided to load every message of the folder once and check locally, putting them in a hash table and doing the comparison in memory. Performance was fine now.
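A minimal sketch of that in-memory check, assuming a hypothetical `ChatMessage` type whose identity is the user, the time, and the content. The type and the `MessageDeduplicator` helper are illustrative names, not the ones used in the project.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical message shape. A record compares by value, so two messages
// with the same user, time, and content are equal and hash the same.
public record ChatMessage(string PersonName, DateTime SentAt, string Content);

public static class MessageDeduplicator
{
    // existing: every message already stored for the folder, loaded in one query.
    // incoming: the messages parsed from the uploaded file.
    public static List<ChatMessage> FilterNew(
        IEnumerable<ChatMessage> existing, IEnumerable<ChatMessage> incoming)
    {
        var seen = new HashSet<ChatMessage>(existing);

        // HashSet.Add returns false when an equal item is already present,
        // so this also drops duplicates inside the uploaded file itself.
        return incoming.Where(message => seen.Add(message)).ToList();
    }
}
```

Each lookup is a hash operation in memory instead of a database round trip, so the cost per file becomes one query plus one insert of the new messages.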

However, this introduced a new issue: there was no longer any guarantee against duplicated messages. A user could upload two files at almost the same time; both would start processing, and since my code queries the database only once, at the start of the method, each would see the database as it was before the other file was processed. This must be avoided.

So a semaphore was added. For simplicity, I only allow one insert process at a time. In a major system the implementation would need to be a little different: I only have to guarantee that there are no duplicates within a folder, and if a user wants multiple folders with the same chats, that should be no problem. So I could define a method that checks whether that FolderId is already being processed and waits until it finishes. It could live in memory or, if I planned to deploy this as microservices, I could create a service to orchestrate it. That way multiple files could be read at once, as long as they don’t belong to the same folder.
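The per-folder variant described above could look roughly like this in memory. This is a sketch of the alternative, not what the project does (it uses a single semaphore for everything), and `FolderLocks` and `RunExclusiveAsync` are hypothetical names.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical helper: one semaphore per FolderId, so files for different
// folders can be processed in parallel while the same folder is serialized.
public class FolderLocks
{
    private readonly ConcurrentDictionary<int, SemaphoreSlim> _locks =
        new ConcurrentDictionary<int, SemaphoreSlim>();

    public async Task RunExclusiveAsync(int folderId, Func<Task> work)
    {
        // GetOrAdd always returns the instance stored in the dictionary,
        // so every caller for a given folder waits on the same semaphore.
        var gate = _locks.GetOrAdd(folderId, _ => new SemaphoreSlim(1, 1));

        await gate.WaitAsync();
        try
        {
            await work();
        }
        finally
        {
            // Released even if the work throws, so the folder is never stuck.
            gate.Release();
        }
    }
}
```

The sketch never removes semaphores from the dictionary, which is fine for a small number of folders but would need cleanup in a long-running system. It also only works inside one process, which is why the text above mentions an orchestrating service for the microservice case.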

But here comes another “but”: imagine you upload 10 files quickly, the second one is still processing and, for some reason, the system crashes. You would need to upload the 9 files again. That ain’t good for your user. So instead of processing the files directly in the controller endpoint, I added another step: a queue, but in PostgreSQL.

Instead of receiving a file and processing it right away, the system now receives the file and saves it as-is in the database, which is much faster. So you could upload 10 files and they would be saved quickly; the system could crash while processing the first one and, once it came back, the 10 files would still be there to be processed, since my “AddChatToFolder” function now reads from the database.

I haven’t bothered to implement a method to check if files are waiting to be processed at system startup, but you get the idea.
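A minimal sketch of the save-first, process-later idea, including the startup check that the project does not implement. Everything here is an assumption made for illustration: `PendingFile`, `PendingFileStore`, and `PendingFileProcessor` are hypothetical names, and the in-memory list stands in for the PostgreSQL table.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical row of the "queue" table: the raw upload, stored as-is.
public class PendingFile
{
    public int Id { get; set; }
    public int FolderId { get; set; }
    public string RawContent { get; set; } = "";
    public bool Processed { get; set; }
}

// In-memory stand-in for the table; a real version would go through EF Core.
public class PendingFileStore
{
    private readonly List<PendingFile> _rows = new List<PendingFile>();
    private int _nextId = 1;

    // What the controller endpoint would do: store the upload and return.
    public PendingFile Save(int folderId, string rawContent)
    {
        var row = new PendingFile { Id = _nextId++, FolderId = folderId, RawContent = rawContent };
        _rows.Add(row);
        return row;
    }

    // Oldest first, so files are processed in upload order.
    public List<PendingFile> GetUnprocessed() =>
        _rows.Where(r => !r.Processed).OrderBy(r => r.Id).ToList();

    public void MarkProcessed(int id) => _rows.First(r => r.Id == id).Processed = true;
}

public class PendingFileProcessor
{
    private readonly PendingFileStore _store;
    private readonly Action<PendingFile> _addChatToFolder;

    public PendingFileProcessor(PendingFileStore store, Action<PendingFile> addChatToFolder)
    {
        _store = store;
        _addChatToFolder = addChatToFolder;
    }

    // Meant to run at startup and after each upload. Returns how many files were handled.
    public int ProcessPending()
    {
        var handled = 0;
        foreach (var file in _store.GetUnprocessed())
        {
            _addChatToFolder(file);
            // Marked only after success: a crash before this line leaves the file queued.
            _store.MarkProcessed(file.Id);
            handled++;
        }
        return handled;
    }
}
```

If the crash happens after the messages were written but before the file was marked, the file is processed again on the next run; the duplicate check makes that second pass harmless.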

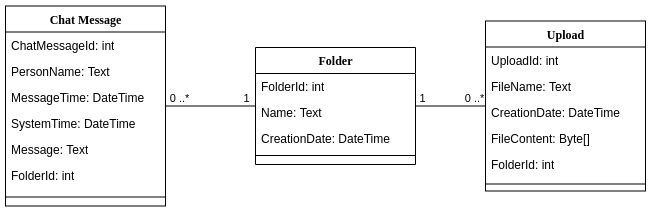

My final classes were the ones below.

Note that there is no relationship between a PersonName in one folder and the same name in another folder. My original idea was to store a PersonName and a PersonPhoneNumber and link them across all the folders, so I could relate data, check when your last contact with that person happened, remind you to contact them…

I also had no tests in the project. The main one would be an integration test checking that no duplicate messages are added, so if you plan to use this code, think about it.

Docker and Docker-Compose

This project uses docker-compose to orchestrate the creation of the system; it’s only a matter of running docker-compose up and you should be fine. I’m also using multi-stage Dockerfiles to create smaller final images, around 200 MB, and some environment variables.

Diagrams of a microservice

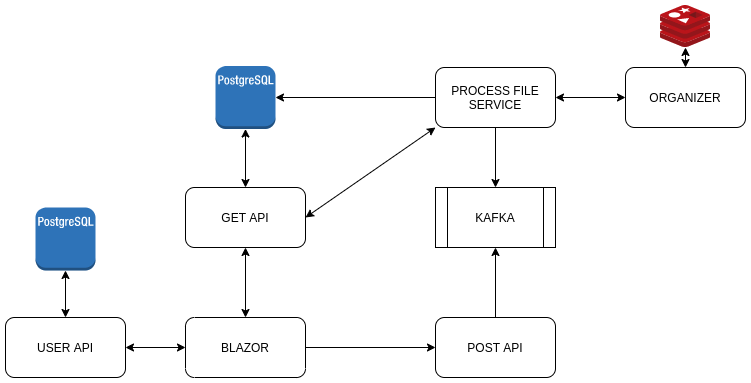

In an ideal world, with thousands of happy users paying me, I think I would end up with something like the system below, since the monolith design of the current back-end system wouldn’t be easy to scale.

Blazor would be the user entry point.

USER API would deal with user registration, user data, and authentication with JWT. There is also a third-party payment provider that is not shown in the diagram.

Every backup file would go to the POST API. If the user is authenticated, the file would be sent to Kafka (or any other queue) and wait there until the PROCESS FILE SERVICE consumes it.

Once the PROCESS FILE SERVICE picked it up, it would ask the ORGANIZER service whether that user was already processing something and, as soon as it was allowed to, it would process the file. This service would also need to fetch all the messages from that user and folder to avoid duplicated messages. Keep in mind that I’m not querying the database here; I’m asking the GET API service to deliver those messages to my service. But the PROCESS FILE SERVICE would write the allowed messages directly to the Messages database.

And finally, the GET API would deliver data to the Blazor front-end and also to the PROCESS FILE SERVICE.

One interesting thought I had while drawing this diagram: I would expect users to upload files only once a week or so but use the app all the time, so maybe the PROCESS FILE SERVICE could be just an Azure Function or AWS Lambda.

Conclusion

I hope you learned something here or from the GitHub code. I have no real intention of developing this project any further; unfortunately, this wasn’t my million-dollar project, but it was still cool to make. And don’t forget to try to build an MVP before anything else. If you need anything Blazor or C# related, feel free to reach me on any of the social networks at the top of the page.